How To Troubleshoot a Broken RAID Volume On a QNAP Storage Device

Something like four weeks ago I had a major issue with my QNAP TS-459 Pro storage device. I did a simple firmware upgrade and whoop my RAID0 volume was gone, oops!

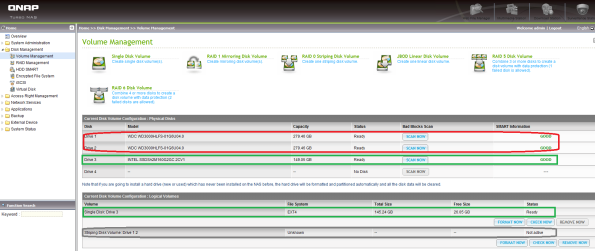

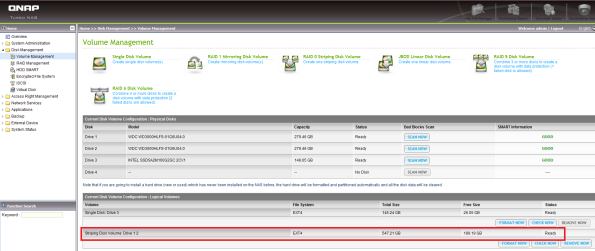

So I was left with three stand alone disks as shown on the screenshot above. The two WDC VelociRaptor disks were supposed to form a single RAID0 volume, well it used to be just before the firmware upgrade…

As an old saying goes, if your data is important backup once, if your data is critical backup twice. My data is important and I had everything rsync’ed on another QNAP TS-639 Pro storage device but still it is pain in the a***. By the way did you know that with the latest beta firmware, your QNAP device can copy your data to a SaaS (Storage as a Service) provider in the Cloud, cool isn’t it ![]()

It took me some time to recover my broken RAID0 volume and many trial and errors. Hopefully I had a backup and a lot of spare time thus I could play around with the device. Fist thing I tried is a restore of the latest known working backup of my QNAP storage device. The restore process went flawlessly but upon reboot, I had the same problem, my RAID0 volume was still gone.

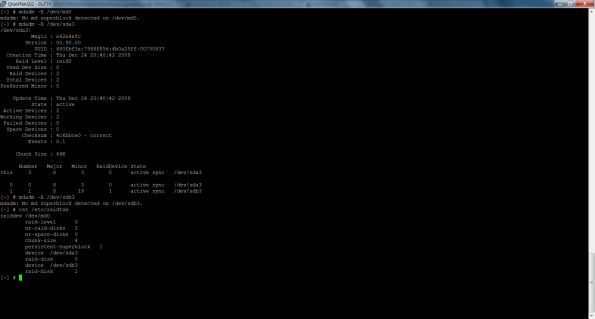

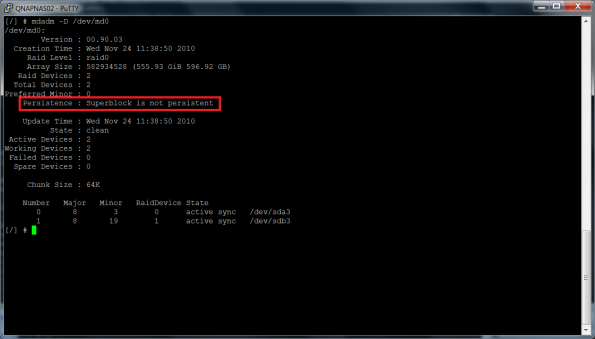

I SSH’ed in the QNAP device and triggered some mdadm commands as shown on the screenshot below.

mdadm -E /dev/md0 confirmed the issue, no RAID0 volume even though I did a restore of the QNAP’s configuration settings.

Whilst mdadm -E /dev/sda3 showed me that a superblock was available for /dev/sda3, that wasn’t the case for /dev/sdb3. That’s not good at all ![]()

/proc/mdstat confirmed that the restore was useless, no /dev/md0 declared in that configuration file…

Well the restore was partially helpful. Look at the screenshots above, in the logical volumes panel, you see that a stripping volume containing disk 1 and 2 was declared but not active. And on the second screenshot, the striping disk volume was unmounted as a result.

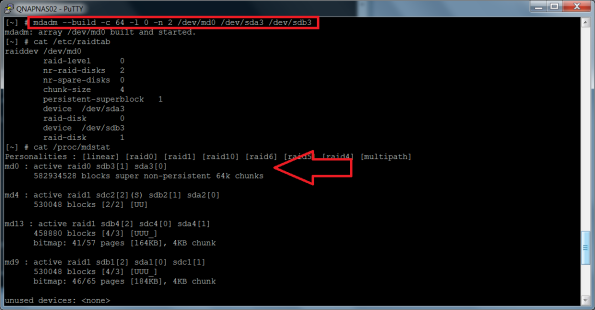

I tried to re-build the stripping configuration with the command: mdadm –build c 64 -l 0 -n 2 /dev/md0 /dev/sda3 /dev/sdb3 and /dev/md0 was successfully appended to /proc/mdstat

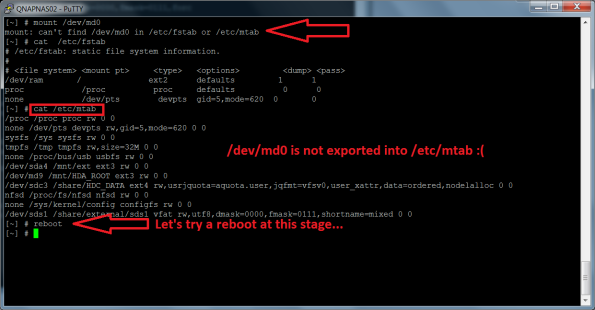

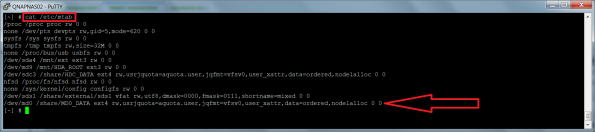

I tried a mount /dev/md0 but that did not work out. I checked /etc/mtab for /dev/md0 but could not find it. It was not exported as I would expect… At this stage I decided that a reboot was necessary…

The device was back up and I checked in the Volume Management panel for the status of the striping volume and it was still not active but this time the File System column showed EXT3. The Check Now button might help to recover the striping volume to a healthy state, let’s try that and indeed it was a success as shown on the two screenshots below. The Check Now button fixed the /dev/md0 entry in /etc/mtab and the status was now as active. Hurray!

Unfortunately the happiness was short because as soon the QNAP device rebooted, the striping volume configuration settings were gone. Actually I could see that the superblock, that is the portion of the disks part of a RAID set, where the parameters that define a software RAID volume, was not persistent, that is was not written in a superblock as shown in the screenshot below.

I had to face it, I would not be able to recover my striping disk volume thus I decided that it was time to re-create it from scratch and copy back my data. So I cleared any RAID volume settings from the device and while at it, I decided to evaluate the sweet spot of the chunk size especially for a RAID0 volume.

That reminds me that even if technology is getting better and better, still it is not error free and shit happens. Hopefully no data was lost for good and I could get my home lab back up with an even faster RAID0 volume as before the crash, thanks to the new chunk size ![]()

Obtained from this link

Los comentarios están cerrados.